AI is becoming increasingly central to film and television production, particularly as the pandemic necessitates resource-constrained remote work arrangements. Pixar is experimenting with AI and generative adversarial networks to produce high-resolution animation content, while Disney recently detailed in a technical paper a system that creates storyboard animations from scripts. And stop-motion animation studios like Laika are employing AI to automatically remove seam lines in frames.

Several of the new Character Animator features, including Speech-Aware Animation and Lip Sync, are powered by Sensei, Adobe's cross-platform machine learning technology, and use algorithms to generate animation from recorded speech and align mouth movements for speaking parts. Limb IK, previously called Arm IK, also arrives with the new Character Animator. The latest release of Character Animator's lip-sync engine, Lip Sync, improves automatic lip-syncing and the timing of mouth shapes called "visemes." Both viseme detection and audio-based muting settings can be adjusted via the settings menu, where users can also fall back to an earlier iteration of the engine.
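To make "the timing of visemes" concrete, here is a minimal sketch of the general idea. It is not how Adobe's Lip Sync works (its internals are not described here); it assumes speech has already been transcribed into timed phonemes, and the phoneme labels, the `PHONEME_TO_VISEME` table, the viseme names, and `phonemes_to_visemes` are all hypothetical. Each phoneme maps to a mouth shape, and consecutive phonemes that share a shape are merged so the mouth holds its pose instead of re-triggering.

```python
from dataclasses import dataclass

# Hypothetical, simplified phoneme-to-viseme table (ARPAbet-style labels).
# The viseme names are illustrative only, not Character Animator's layer names.
PHONEME_TO_VISEME = {
    "P": "MBP", "B": "MBP", "M": "MBP",
    "F": "FV", "V": "FV",
    "AA": "Ah", "AE": "Ah", "AH": "Ah",
    "IY": "Ee", "IH": "Ee", "EH": "Ee",
    "OW": "Oh", "AO": "Oh",
    "UW": "W-Oo", "W": "W-Oo",
    "L": "L",
    "S": "S", "Z": "S", "T": "S", "D": "S", "N": "S",
    "SIL": "Neutral",
}


@dataclass
class Segment:
    viseme: str
    start: float  # seconds
    end: float    # seconds


def phonemes_to_visemes(phonemes):
    """Convert (phoneme, start, end) tuples into merged viseme segments.

    Unknown phonemes fall back to "Neutral". Consecutive phonemes that map
    to the same viseme are merged into a single, longer segment.
    """
    segments = []
    for phoneme, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, "Neutral")
        if segments and segments[-1].viseme == viseme:
            # Same mouth shape as before: extend the held segment.
            segments[-1].end = end
        else:
            segments.append(Segment(viseme, start, end))
    return segments


if __name__ == "__main__":
    timed = [("P", 0.0, 0.1), ("B", 0.1, 0.2), ("AA", 0.2, 0.4), ("SIL", 0.4, 0.5)]
    for seg in phonemes_to_visemes(timed):
        print(f"{seg.viseme:8s} {seg.start:.2f}-{seg.end:.2f}s")
```

Merging adjacent identical shapes is one simple way to avoid jittery mouths; production systems typically also smooth or drop very short visemes, which this sketch leaves out.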

# Automatic Lip Sync in Adobe Animate Software

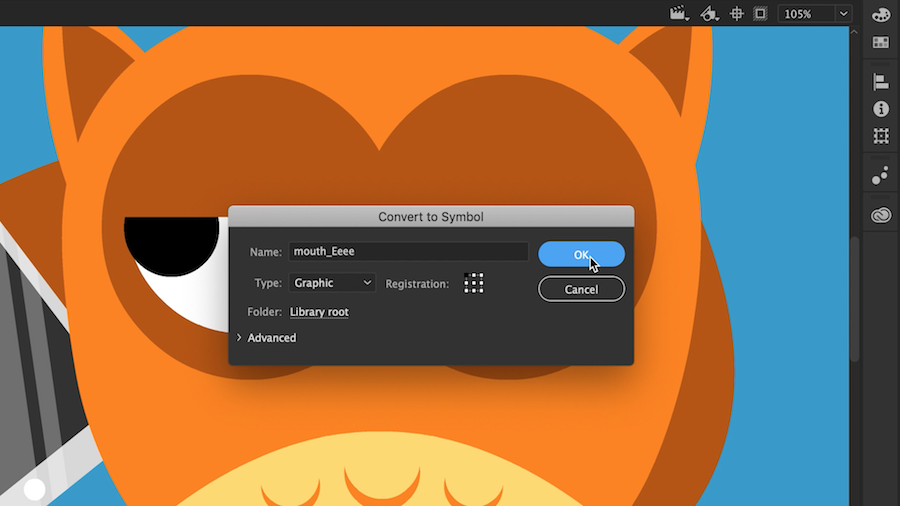

Adobe today announced the beta launch of new features for Adobe Character Animator (version 3.4), its desktop software that combines live motion capture with a recording system to control 2D puppets drawn in Photoshop or Illustrator.

"Very few cartoons are broadcast live. It's a terrible strain on the animators' wrists," a voice actor jokingly replied in a scene from The Simpsons. It seems that Homer's was a prophetic vision: the first-ever live cartoon, an episode of The Simpsons, aired in 2016, and by 2019 live 2D animation had become a powerful new medium in which actors act out a scene that is animated on screen at the very same time.

The live animation technique uses facial detection software, cameras, recording equipment, and digital animation techniques to create an animation that runs alongside a real acted scene. In one recent example, Stephen Colbert interviewed cartoon guests, including Donald Trump, on The Late Show. In another, Archer's main character talked to an audience live and answered questions during a Comic-Con panel.

## Live lip syncing

A key aspect of live 2D animation is good lip syncing. It allows the mouths of animated characters to move appropriately when speaking and to mirror the performances of human actors. Poor lip syncing, on the other hand, can break the illusion by ruining immersion, much like a bad language dub. Machine learning is helping all of this run more smoothly and keep improving.

In a paper prepublished on arXiv, two researchers at Adobe Research and the University of Washington introduced a deep-learning-based interactive system that automatically generates live lip syncing for layered 2D animated characters.
